-

Improvement

-

Resolution: Won't Fix

-

Normal

Normal

-

None

-

None

-

None

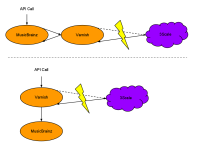

While in SLO, we had a call with the 3scale team, and they pointed us to a Varnish plugin that does exactly what we want to do. We had identified a few possible race conditions, and this plugin takes care of them. So, let's move our 3scale implementation over to that Varnish plugin so that we use the recommended off-the-shelf software.

Finally, many people making simultaneous requests is a case that needs to be handled in the rate limiter. Please evaluate the currently proposed rate limiter changes with Dave and see whether further changes are required. Assign Dave MBH tickets as needed.

- depends on MBH-274 Ian needs access to 3scale-vcl git repository (Closed)